The Math Behind Gaussian Splatting

This post mainly annotates parts of the CUDA code and explains some of its parameters.

References

https://zhuanlan.zhihu.com/p/680669616

EWA Splatting

3D Gaussian Splatting for Real-Time Radiance Field Rendering

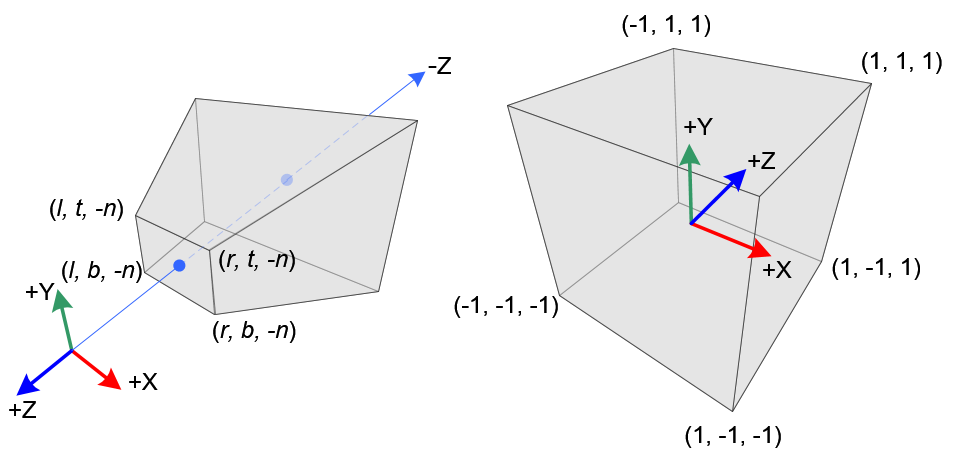

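The splatting pipeline

The 3D model is represented by a set of Gaussians. Each one has a color (spherical harmonics coefficients), a position in the world frame (means3D), an orientation (rotations, a quaternion), and a scale along each of its axes (scales). Rendering first projects every Gaussian onto the image plane and computes the region its projection covers, then builds an index from each pixel to the Gaussians that cover it, and finally computes the color of each pixel.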
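The four stages above can be sketched in plain Python. This is a toy illustration under loud assumptions, not the CUDA rasterizer: the splats are assumed to be already projected to screen space, each one is isotropic (a single sigma instead of a 2D covariance), colors are constant instead of spherical harmonics, and the tile size, the 3-sigma radius and all function names are hypothetical choices made for this sketch.

```python
import math

TILE = 16  # tile edge in pixels (assumed value, for illustration only)


def covered_tiles(cx, cy, radius, width, height):
    """Return the (tx, ty) tiles overlapped by a splat's bounding square."""
    x0 = max(0, int((cx - radius) // TILE))
    y0 = max(0, int((cy - radius) // TILE))
    x1 = min((width - 1) // TILE, int((cx + radius) // TILE))
    y1 = min((height - 1) // TILE, int((cy + radius) // TILE))
    return [(tx, ty) for ty in range(y0, y1 + 1) for tx in range(x0, x1 + 1)]


def build_tile_lists(splats, width, height):
    """Map each tile to the indices of the splats covering it, nearest first."""
    tiles = {}
    for i, s in enumerate(splats):
        radius = 3.0 * s["sigma"]  # assumed 3-sigma footprint
        for tile in covered_tiles(s["x"], s["y"], radius, width, height):
            tiles.setdefault(tile, []).append(i)
    for ids in tiles.values():
        ids.sort(key=lambda i: splats[i]["depth"])
    return tiles


def shade_pixel(px, py, ids, splats):
    """Front-to-back alpha compositing of the splats listed for this pixel."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for i in ids:
        s = splats[i]
        d2 = (px - s["x"]) ** 2 + (py - s["y"]) ** 2
        alpha = s["opacity"] * math.exp(-0.5 * d2 / s["sigma"] ** 2)
        for c in range(3):
            color[c] += transmittance * alpha * s["color"][c]
        transmittance *= 1.0 - alpha
    return color


def render(splats, width, height):
    """Render an image as nested lists: image[y][x] = [r, g, b]."""
    tiles = build_tile_lists(splats, width, height)
    image = [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]
    for (tx, ty), ids in tiles.items():
        for py in range(ty * TILE, min((ty + 1) * TILE, height)):
            for px in range(tx * TILE, min((tx + 1) * TILE, width)):
                image[py][px] = shade_pixel(px, py, ids, splats)
    return image
```

Each splat here is a dict with the keys x, y, sigma, depth, opacity and color, and render(splats, width, height) returns the composited image. Sorting each tile's list by depth before blending is what lets the per-pixel loop accumulate color front to back.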

```cpp
// Forward rendering procedure for differentiable rasterization
```

```cpp
// int CudaRasterizer::Rasterizer::forward(
```

forward calls preprocess:

```cpp
__global__ void preprocessCUDA(int P, int D, int M,
```

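preprocess first checks whether a Gaussian is too close to the camera, and it also seems to compute the Gaussian's coordinates in NDC space.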

```cpp
// if (!in_frustum(idx, orig_points, viewmatrix, projmatrix, prefiltered, p_view))
```

```cpp
__forceinline__ __device__ float3 transformPoint4x3(const float3& p, const float* matrix)
```
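To make the near-plane check and the NDC step concrete, here is a minimal Python sketch of what transformPoint4x3 and the in_frustum test might compute. It rests on assumptions that the excerpts above do not confirm: that matrix is a flat array of 16 floats in column-major order, that the check rejects points whose view-space depth is below a small threshold (near_z = 0.2 is a placeholder value), and that NDC coordinates come from a perspective divide. The helper transform_point_4x4 and the return shape of in_frustum are hypothetical.

```python
def transform_point_4x3(p, matrix):
    """Apply the top three rows of a column-major 4x4 matrix to point p."""
    x, y, z = p
    return (
        matrix[0] * x + matrix[4] * y + matrix[8] * z + matrix[12],
        matrix[1] * x + matrix[5] * y + matrix[9] * z + matrix[13],
        matrix[2] * x + matrix[6] * y + matrix[10] * z + matrix[14],
    )


def transform_point_4x4(p, matrix):
    """Apply a full column-major 4x4 matrix; returns homogeneous (x, y, z, w)."""
    x, y, z = p
    return tuple(
        matrix[r] * x + matrix[4 + r] * y + matrix[8 + r] * z + matrix[12 + r]
        for r in range(4)
    )


def in_frustum(p_world, viewmatrix, projmatrix, near_z=0.2):
    """Return (visible, p_view, p_ndc); p_ndc is None for rejected points.

    near_z is an assumed threshold, not a value taken from this post.
    """
    p_view = transform_point_4x3(p_world, viewmatrix)
    if p_view[2] <= near_z:
        return False, p_view, None  # too close to, or behind, the camera
    p_hom = transform_point_4x4(p_world, projmatrix)
    inv_w = 1.0 / (p_hom[3] + 1e-7)  # small epsilon guards against w == 0
    p_ndc = (p_hom[0] * inv_w, p_hom[1] * inv_w, p_hom[2] * inv_w)
    return True, p_view, p_ndc
```

With this layout the translation sits in elements 12 to 14 of the flat array, which is why the last term of each row reads matrix[12], matrix[13] and matrix[14].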

So what is projmatrix here? Let's trace it outward through the callers.

```python
def setup_camera(w, h, k, w2c, near=0.01, far=100):
```
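One plausible reading, sketched below in plain Python, is that projmatrix is the product of a perspective matrix built from the intrinsics k and the world-to-camera matrix w2c. This is an assumption about what setup_camera does with its arguments, not a copy of its body. The helper names are hypothetical, and the depth convention (near maps to 0, far maps to 1) is one common choice among several.

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists (row-major)."""
    return [[sum(a[i][n] * b[n][j] for n in range(4)) for j in range(4)]
            for i in range(4)]


def intrinsics_projection(w, h, k, near=0.01, far=100.0):
    """Perspective matrix from pinhole intrinsics k (3x3), image size w x h.

    Maps camera-space points so that pixel u becomes NDC x = 2u/w - 1
    and depth near..far becomes 0..1 (assumed convention).
    """
    fx, fy, cx, cy = k[0][0], k[1][1], k[0][2], k[1][2]
    return [
        [2 * fx / w, 0.0, -(w - 2 * cx) / w, 0.0],
        [0.0, 2 * fy / h, -(h - 2 * cy) / h, 0.0],
        [0.0, 0.0, far / (far - near), -(far * near) / (far - near)],
        [0.0, 0.0, 1.0, 0.0],
    ]


def full_projection(w, h, k, w2c, near=0.01, far=100.0):
    """World -> clip space: perspective matrix times world-to-camera."""
    return matmul4(intrinsics_projection(w, h, k, near, far), w2c)


def flatten_column_major(m):
    """Flatten a 4x4 nested list into 16 floats, column by column."""
    return [m[r][c] for c in range(4) for r in range(4)]


def project(p, m):
    """Project a 3D point with 4x4 matrix m and apply the perspective divide."""
    x, y, z = p
    hom = [m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3] for r in range(4)]
    return [hom[i] / hom[3] for i in range(3)]
```

Under these assumptions, a point on the optical axis lands at NDC (0, 0) when the principal point is the image center, and flatten_column_major gives the flat 16-float layout that a kernel reading matrix[12], matrix[13], matrix[14] as the translation would expect.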

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit The world need more Miku! when reposting.